We may all dream of a future where smart cars drive us from one place to another while we read a book or take a nap in the back seat, but research is still ongoing to get autonomous vehicles ready for the road.

Here, we highlight research undertaken using EuroHPC JU supercomputing resources that addresses some of the remaining technological challenges in creating the next generation of vehicles, including autonomous trucks, buses, cars, and “robotaxis”.

In this interview, EuroHPC JU talked to Dr Fatma Güney, from the Department of Computer Engineering at Koç University in Istanbul, about her research on computer vision and autonomous driving and the role of the EuroHPC Joint Undertaking in accelerating it.

Over the last five years, you have built the Autonomous Vision Group to work at the cutting edge of computer vision and self-driving vehicles. Can you share with us what you are working on right now?

Our research at the Autonomous Vision Group focuses on computer vision problems related to self-driving, including 3D vision, video understanding, and end-to-end learning of self-driving systems. We tackle these problems from both practical and theoretical angles. On the practical side, we study how large foundation models can be adapted for efficient use in self-driving, enabling vehicles to harness their powerful capabilities in real time despite their heavy computational demands. On the theoretical side, we investigate methods such as uncertainty estimation in driving scenes and advanced 3D representation learning. These efforts aim to make decision-making more cautious and reliable, particularly when the model faces ambiguous or uncertain situations.

Each colour in this GIF represents a slot that binds to an object and tracks it across the sequence. For example, orange follows the zebra on the right, while purple follows the one on the left.

Could you share a concrete example that brings together self-driving and computer vision?

In recent years, our work has built on a deep learning architecture known as the Transformer, which processes data as sequences of tokens. In natural language processing, for instance, tokens may correspond to words, phrases, or even individual characters. Our research focuses on identifying the most effective way to tokenise visual scenes for self-driving. Rather than representing an entire scene as a single entity or relying solely on object attributes such as position and size, we pursue an object-centric approach that captures richer structure and dynamics.

In our work, we employ the concept of slots, which serve as placeholders for individual objects (see the GIF above). You can think of them as pigeonholes in a sorting system: each object in a scene is sorted into its own slot, so that the computer can process each object separately, as it appears in real driving environments. For autonomous systems, this means every vehicle in a scene is assigned to a slot, and these slot representations act as tokens for a Transformer model. This approach captures richer information, such as an object’s position and motion relative to others, resulting in better predictions and a deeper understanding of scene dynamics. Ultimately, this research aims to make self-driving cars safer and more efficient by supporting reliable decision-making as vehicles interact with multiple agents moving in different directions.
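To make the slot idea more concrete, here is a minimal sketch of how a small set of slots can compete for input features, loosely modelled on the iterative grouping step of Slot Attention (Locatello et al., 2020). It is an illustration under simplifying assumptions, not the group's model: there are no learned projections, no recurrent update and no Transformer, the 2D "features" are toy values, and the names `group_into_slots`, `slot_attention_weights` and the inverse temperature `beta` are hypothetical. With these simplifications the update behaves much like soft k-means.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of logits."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def slot_attention_weights(
    features: Sequence[Vector], slots: Sequence[Vector], beta: float
) -> List[List[float]]:
    """attn[i][k] is the share of feature i claimed by slot k.

    The softmax runs over slots, so slots compete for each input feature.
    """
    return [
        softmax([beta * sum(f * s for f, s in zip(feat, slot)) for slot in slots])
        for feat in features
    ]


def group_into_slots(
    features: Sequence[Vector],
    init_slots: Sequence[Vector],
    beta: float = 5.0,       # hypothetical inverse temperature
    iterations: int = 3,
) -> Tuple[List[Vector], List[int]]:
    """Simplified slot-style grouping (hypothetical helper, not the authors' code)."""
    slots = [list(s) for s in init_slots]
    dim = len(features[0])
    for _ in range(iterations):
        attn = slot_attention_weights(features, slots, beta)
        new_slots = []
        for k in range(len(slots)):
            weights = [row[k] for row in attn]
            total = sum(weights)
            # Each slot becomes the attention-weighted mean of the features it claimed.
            new_slots.append(
                [sum(w * feat[d] for w, feat in zip(weights, features)) / total
                 for d in range(dim)]
            )
        slots = new_slots
    # Hard assignment with the final slots, for inspection only.
    attn = slot_attention_weights(features, slots, beta)
    assignment = [max(range(len(slots)), key=lambda k: row[k]) for row in attn]
    return slots, assignment


# Two toy "objects": the first two features belong together, as do the last two.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
slots, assignment = group_into_slots(features, init_slots=[[0.6, 0.4], [0.4, 0.6]])
print(assignment)  # [0, 0, 1, 1]
```

Each returned slot vector could then serve as one token in a Transformer's input sequence, giving one token per object rather than one per pixel or image patch.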

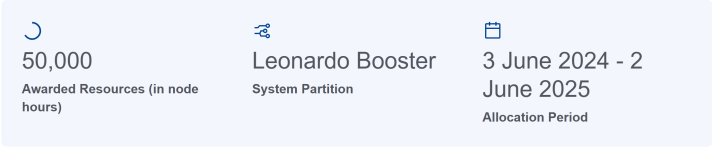

Your research team was awarded node hours on the EuroHPC JU supercomputer Leonardo. How has access to these resources helped you achieve your research goals?

High-performance computing (HPC) is critical to our research. Deep learning, especially in fields like computer vision and autonomous driving, demands significant computational resources. While companies competing in the same field often have access to thousands of GPUs, our local academic cluster provides only a few hundred GPUs shared among nearly 100 researchers. In this setting, even running a baseline model that requires 8× A100 GPUs can be considered a luxury.

Access to Leonardo allowed two members of my team to train models using up to 32× A100 GPUs in parallel. This level of compute power made it possible for us to develop and validate more ambitious ideas at scale and led to two top-tier publications this year, one at the International Conference on Learning Representations (ICLR) and another at the International Conference on Computer Vision (ICCV). Without HPC resources like Leonardo, achieving this level of impact would have been significantly more difficult.

Example video produced with Track-On, a transformer-based model for online long-term point tracking.

Can you tell us more about how you use EuroHPC JU resources in your work and what the outcomes of your research projects have been so far?

Our research uses EuroHPC JU resources to tackle two key challenges in computer vision for autonomous systems: (1) speeding up large AI models and (2) tracking visual information over time in real-world video.

In the first project, we looked at how to make powerful deep learning models fast enough for real-time decision-making in self-driving. Large models are typically too slow to run on every video frame, while small models lack accuracy. Our solution, called ETA (Efficiency through Thinking Ahead), combines both. The small model handles quick reactions, while the large one "thinks ahead" by processing earlier frames in batches. This shifts the heavy computation to the past, making it possible to use large models without slowing the system down. Thanks to EuroHPC JU, we could train this system at scale, which helped us publish our results at ICCV, a top-tier conference, and achieve a state-of-the-art result on the CARLA Bench2Drive benchmark. To support the community of researchers working in this area, we also made our code and models available on GitHub.
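The scheduling idea described above can be sketched as a toy loop. This is a sketch under stated assumptions, not the ETA code released on GitHub: `run_think_ahead`, `small_model`, `large_model`, `fuse` and `batch_size` are hypothetical stand-ins, the "models" are plain functions, and the large model is called synchronously here, whereas a real system would run it in the background. What it does illustrate is the key property: the output for each frame combines a cheap per-frame result with a large-model summary computed only from frames that have already passed.

```python
from typing import Any, Callable, List, Optional, Sequence, Tuple


def run_think_ahead(
    frames: Sequence[Any],
    small_model: Callable[[Any], Any],
    large_model: Callable[[List[Any]], Any],
    fuse: Callable[[Any, Optional[Any]], Any],
    batch_size: int,
) -> Tuple[List[Any], int]:
    """Toy 'small model now, large model on the past' loop (hypothetical API).

    Returns one fused output per frame and the number of large-model calls.
    """
    outputs: List[Any] = []
    past_context: Optional[Any] = None  # large-model summary of earlier frames
    pending: List[Any] = []             # frames not yet seen by the large model
    large_calls = 0
    for frame in frames:
        fast = small_model(frame)                 # cheap: runs on every frame
        outputs.append(fuse(fast, past_context))  # context only covers past frames
        pending.append(frame)
        if len(pending) == batch_size:
            # Expensive step, amortised over a whole batch of already-seen frames.
            past_context = large_model(pending)
            large_calls += 1
            pending = []
    return outputs, large_calls


# Usage with stand-in "models" on integer frame indices.
outputs, calls = run_think_ahead(
    frames=list(range(6)),
    small_model=lambda f: f,
    large_model=lambda batch: max(batch),  # "summary" = latest frame in the batch
    fuse=lambda fast, ctx: (fast, ctx),
    batch_size=3,
)
print(outputs)  # [(0, None), (1, None), (2, None), (3, 2), (4, 2), (5, 2)]
print(calls)    # 2
```

In this toy run the large model is invoked twice for six frames rather than six times, and every context it supplies refers to an earlier frame than the one being decided on.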

In the second project, we tackled the problem of long-term online point tracking: following the same points in a video over time, even through changes in lighting, motion, or occlusion. We introduced Track-On, a lightweight transformer-based model that runs in real time and doesn’t need to look at future frames. It relies on a memory mechanism to remember what it has seen and where, enabling accurate frame-by-frame tracking.
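As a rough illustration of online tracking with a memory, the sketch below follows one query point frame by frame, using only the current frame and a short memory of the point's past appearance. It is a heavily simplified, hypothetical stand-in, not Track-On itself: there is no transformer, candidate locations and their feature vectors are given directly, matching uses the average cosine similarity to the remembered features, and `track_point_online`, `mean_similarity` and `memory_size` are names invented for this example.

```python
import math
from collections import deque
from typing import Dict, Iterable, List, Sequence, Tuple

Location = Tuple[int, int]
Feature = Sequence[float]


def cosine(a: Feature, b: Feature) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mean_similarity(feature: Feature, memory: Iterable[Feature]) -> float:
    """Average similarity of a candidate feature to everything in memory."""
    entries = list(memory)
    return sum(cosine(feature, m) for m in entries) / len(entries)


def track_point_online(
    query_feature: Feature,
    frames: Sequence[Dict[Location, Feature]],
    memory_size: int = 3,  # hypothetical memory length
) -> List[Location]:
    """Follow one point through a video, one frame at a time (no look-ahead).

    Each frame maps candidate locations to feature vectors. The tracker picks
    the candidate most similar, on average, to what it remembers about the
    point, then stores that candidate's feature so the memory can follow
    gradual appearance changes.
    """
    memory = deque([query_feature], maxlen=memory_size)  # oldest entries drop out
    track: List[Location] = []
    for candidates in frames:
        best = max(candidates, key=lambda loc: mean_similarity(candidates[loc], memory))
        track.append(best)
        memory.append(candidates[best])
    return track


frames = [
    {(0, 0): [1.0, 0.0], (5, 5): [0.0, 1.0]},
    {(1, 0): [0.9, 0.3], (5, 5): [0.0, 1.0]},
    {(2, 0): [0.7, 0.6], (5, 5): [0.2, 1.0]},  # target's appearance keeps drifting
]
track = track_point_online([1.0, 0.0], frames)
print(track)  # [(0, 0), (1, 0), (2, 0)]
```

Because each step only reads the current frame and the memory, the loop is causal by construction, which is the property that allows an online tracker to run on a live video stream.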

Access to EuroHPC resources allowed us to train and evaluate this model on seven large datasets, including TAP-Vid, where it achieved competitive or state-of-the-art performance and set a new benchmark for online tracking. The work was accepted to ICLR, and the model, along with its training and evaluation framework, was made publicly available for real-time video understanding applications.

Neither project would have been possible at this scale without the EuroHPC JU and the resources it provides to researchers free of charge. Access to its supercomputers enabled us to train on large video datasets, run extensive experiments, and explore models that wouldn’t be feasible on our local cluster.

What advice would you give to other researchers about working with EuroHPC supercomputers like Leonardo?

I don’t work directly in HPC, but the members of my team are enthusiastic and grateful users of it. In fields like deep learning, having access to large-scale computational resources is essential to stay competitive. I encourage all researchers not to shy away from applying for HPC resources and using them to push the boundaries of their work. After seeing the impact HPC had on our research, at least two of my colleagues, one based in Turkey and another in the Netherlands, were inspired to apply for EuroHPC JU resources themselves for other projects. It’s a powerful tool, and I hope more researchers in different fields will start to make full use of it.

Dr Fatma Güney is a computer scientist by training and began her academic journey at some of Turkey’s leading institutions. She completed her undergraduate studies at Bilkent University in Ankara and earned her Master’s degree at Boğaziçi University in Istanbul. She undertook her doctoral studies at the Max Planck Institute for Intelligent Systems in Germany, where she had the opportunity to work on autonomous driving under the supervision of Prof. Andreas Geiger. Dr Güney joined the Visual Geometry Group (VGG) at the University of Oxford as a postdoctoral researcher, working with Professors Andrew Zisserman and Andrea Vedaldi.

In 2019, she returned to Turkey to join Koç University as an Assistant Professor and has since built up her research group, the Autonomous Vision Group. The group’s research lies at the intersection of computer vision and autonomous driving, and it has received funding from TUBITAK, the UK’s Royal Society, and the European Research Council.