Most of us imagine a future where a robot can cook dinner, put on a wash, and maybe even pour us a drink. For that vision to become reality, robots must be trained to understand the movements, actions, tools and step-by-step procedures that are part of everyday tasks for humans. Artificial intelligence can contribute to research in this direction by processing raw visual data at scale.

In this EuroHPC success story, researchers automatically annotated more than one million YouTube videos, opening the possibility of training robots directly from demonstrations. Dr. Evangelos Kazakos, Dr. Cordelia Schmid and Dr. Josef Sivic developed a grounded video captioning method (GROVE) that links language to specific objects and regions in each video. This framework can be used in a variety of contexts and provides a powerful foundation for pre-training models on large-scale web videos.

Learn by watching 1 million YouTube videos

In this project, the research team proposed an approach that can perform Grounded Video Caption Generation. This began with a novel automatic annotation method which was used to label 1 million YouTube videos. The videos showed people completing everyday tasks such as cooking a meal, sweeping the floor or doing arts and crafts.

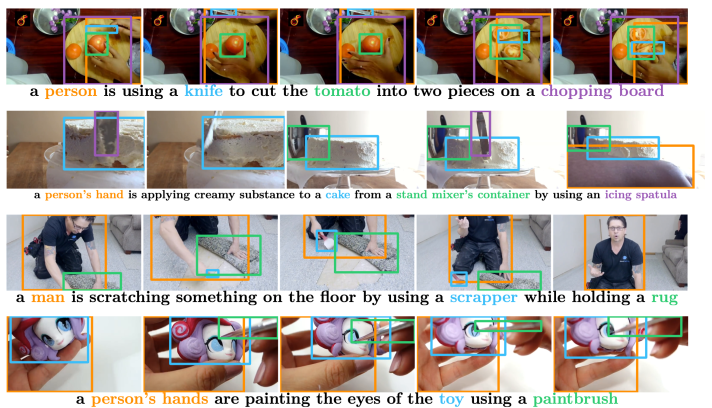

Examples of automatically annotated videos are shown in Figure 1. In each task, the machine identifies key elements in the activities in the video. The research team also created a set of human-labelled videos (Figure 2). This was used for accurate evaluation of how the model was performing. The proposed datasets from this research are publicly available online.

The second milestone of the research team was the introduction of a state-of-the-art method for grounded video caption generation. The method combines a Large Language Model (LLM) and two Vision-Language Models (VLMs), that collaborate to carry out 3 specific tasks:

First, a VLM generates a “visual summary” of the main activities in the video, which has the form of a mathematical object that the LLM can understand. Using this “visual summary”, the LLM generates a short caption in natural language, describing what is happening in the video, e.g. “A man is adding ingredients from a bowl and cup into a jar to mix them well” (Figure 3).

Next, the LLM identifies the noun phrases in the caption, which are essentially the key elements in the video. In the example below (Figure 4), these are the man, the bowl, the cup and the jar.

In the final step, a second VLM predicts bounding boxes over the video frames, linking each noun phrase in the caption to the corresponding object in the scene (Figure 5). This makes the caption clearly tied to where objects are and how they move through space.

Through each step, the model gains a better understanding of the video, obtaining the ability to not only recognise what is in the video, but also to point out these entities and make more accurate predictions and explanations of the actions taking place – a great example of machine learning!

The developed machine learning method was accepted to ICCV 2025 – the IEEE/CVF International Conference on Computer Vision. ICCV is one of the most influential conferences in computer vision (along with CVPR and ECCV) and is consistently ranked among the top venues in computer science and artificial intelligence.

Hardware and Hard Work

EuroHPC JU supercomputing resources were used at two main stages of the project:

(i) large-scale automatic annotation to build the HowToGround1M dataset, and

(ii) model development, training and evaluation.

For the task of automatic annotation, access to the LUMI supercomputer was crucial, as the project pipeline required per-frame captioning and object detection over millions of video frames. By parallelising the workload and splitting videos across many compute nodes and GPUs, the researchers were able to run visual foundation models and LLMs in parallel and complete pseudo-labelling in a reasonable timeframe.

The vLLM library played a key role here: it enabled high-throughput and memory-efficient LLM inference with techniques like continuous batching, PagedAttention, and model parallelism, which allowed the research team to feed the LLM with multiple inputs from multiple video frames and scale up to billions of tokens across millions of videos on the supercomputer.

When the team began working on model development, they used EuroHPC JU resources to train and evaluate their GROVE architectures on the large-scale video–language data created in stage one. The entire stack was implemented in Python, using PyTorch as the main deep learning framework and the Hugging Face transformers library for LLM/VLM components.

Access to LUMI enabled the team to run large-scale pseudo-labelling with vLLM and to perform memory and compute-intensive multi-node training with DeepSpeed and PyTorch, which would not have been feasible on conventional hardware.

Overall, this extensive research project made some important observations:

- The volume of the automatically annotated data was crucial for obtaining good performance.

- The human-labelled data provided significant boosts in the model’s ability to ground objects in the video.

- The proposed model outperformed all previous strong approaches on five different video grounding benchmarks.

Real Potential

Research outputs from this project have impact beyond the core grounded captioning task. The data and models produced in the project are already being applied to new research areas including 3D object pose estimation and a project transferring human trajectories to robotic trajectories; where large-scale grounded visual data is currently a key bottleneck. Another project is focused on building a robotic manipulation model that leverages visual grounding to improve object understanding, localisation and manipulation.

Finally, the team is now working towards extending their model to cover temporal grounding and language-guided understanding of object state changes. This direction is particularly relevant for long-horizon robotic tasks where understanding how objects evolve over time is essential. As an example, imagine a robot helping to prepare dinner by chopping a carrot. After cutting, the “carrot” is no longer a single object but a pile of slices. The robot needs to understand that all these pieces are the same carrot in a new state, and to be able to find, pick up, and move them on to the next part of the task—even though their shape and appearance have changed dramatically.

The potential for innovation doesn’t stop there!

The ideas and tools developed in this project can benefit applications such as autonomous driving: a driver could verbally refer to an event or object on the road, and the system could ground this reference in the scene and, in turn, point to specific locations or objects to explain its decisions and planned actions. In this way, this research contributes not only new datasets and models, but also building blocks for more transparent, interactive and capable vision–language systems in robotics and intelligent vehicles.

In the longer term, such models and datasets can underpin assistive robots operating in homes or hospitals, for example supporting elderly or disabled individuals with everyday activities such as preparing food, tidying up, or handling medical equipment, where robots must interpret verbal instructions, ground them in complex, dynamic scenes, and understand how objects change over time. The grounding and object state-change understanding developed in this project can also support robots sorting and handling packages in logistics centres or picking fruits in agriculture. In all these settings, it is crucial that robots can link spoken instructions to the right places and objects in the real world and show what they plan to do by clearly pointing to specific items or areas. This grounding of both instructions and intentions can contribute to safer, more reliable and more helpful human–robot interaction in everyday and clinical environments.

The team intends to apply to the EuroHPC JU AI for Science and Collaborative EU Projects access to extend their current work towards 4D Understanding with Vision & Language. This new project will develop large-scale multimodal models that learn rich, explicit representations of 3D space, objects, and their temporal interactions from video, language, and 3D signals, moving beyond describing “what happens” to reasoning about where things are, how far apart they are, how they move, and how they can be manipulated. Using EuroHPC resources, they aim to train and evaluate foundation-style vision-language models that integrate 2D videos, textual descriptions, and 3D data (including simulated 3D environments, to overcome the cost of large-scale 3D annotation), and that can generalise across domains such as robotic manipulation, scene understanding, and remote sensing, ultimately providing a reusable core for spatial reasoning in diverse scientific workflows.

All of this important research means that dream of sipping a drink prepared by your home-robot is hopefully not so far away!

Meet the Research Team

Evangelos Kazakos is a postdoctoral researcher at the Czech Institute of Informatics, Robotics and Cybernetics (CIIRC) at the Czech Technical University in Prague (CTU). He is a member of the IMPACT group led by Josef Sivic. His current research interests lie in vision-language understanding, multi-modal learning, foundation models as well as vision-language-action models for robotics. He is particularly interested in automatic data generation methods as well as in bridging the gap between vision and robotics. Previously, he was a research scientist at Samsung AI Center in Cambridge working in vision & language. He obtained his PhD from University of Bristol where his thesis was on Audio-Visual Egocentric Action Recognition. He has been part of a large-scale data collection effort, EPIC-KITCHENS, which has been fundamental for video understanding, and has since contributed to multiple data collection projects.

Cordelia Schmid is a Research Director at INRIA. Dr. Schmid is a member of the German National Academy of Sciences, Leopoldina and a fellow of IEEE and the ELLIS society. She was awarded the Longuet-Higgins Prize in 2006, 2014 and 2016, the Koenderink Prize in 2018 and the Helmholtz prize in 2023, all for her fundamental contributions in computer vision that have withstood the test of time. She received an ERC Advanced Grant in 2013, the Humboldt Research Award in 2015, the INRIA and French Academy of Science Grand Prix in 2016, the Royal Society Milner award in 2020 and the PAMI Distinguished Researcher award in 2021. In 2023 she received the Körber European Science Prize, in 2024 the European Inventor Award in the research category and in 2025 the ACM Athena Lecturer Award, the Hans Fischer Senior TUM Fellowship and the Archimedes Science Award. Dr. Schmid has been an Associate Editor for IEEE PAMI (2001-2005) and for IJCV (2004-2012), an Editor-in-Chief for IJCV (2013-2018), a Program Chair of IEEE CVPR 2005 and ECCV 2012 as well as a general chair of IEEE CVPR 2015, ECCV 2020 and ICCV 2023. Since 2018 she holds a joint appointment with Google Research.

Josef Sivic holds a distinguished researcher position at the Czech Institute of Robotics, Informatics and Cybernetics (CIIRC) at the Czech Technical University in Prague, where he heads the Intelligent Machine Perception team and the ELLIS Unit Prague. He received the habilitation degree from Ecole Normale Superieure in Paris and PhD from the University of Oxford. After PhD, he was a post-doctoral associate at the Computer Science and Artificial Intelligence Laboratory at the Massachusetts Institute of Technology and then spent more than 10 years at Inria Paris where he received an ERC Starting Grant. He was awarded the British Machine Vision Association Sullivan Thesis Prize, three test-of-time awards at major computer vision conferences, and, in 2023, an ERC Advanced Grant. From 2019 to 2025 he served on the board of the European Laboratory of Learning and Intelligent Systems (ELLIS).